
The bio-engineering group's research focuses on the system architecture and development of multi-finger prosthetic hands and rehabilitation robots. The main research areas are:

- Design and control of robotic hands

- Development of electromyographic (EMG) signal control systems

- Development of force feedback gloves and virtual reality

- Safety and rehabilitation robotics

- Brain-computer interface

Design and Control of Robotic Hands:

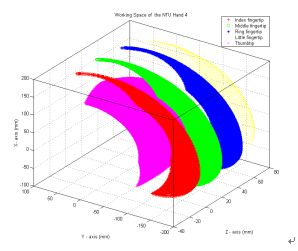

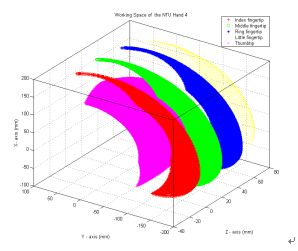

A robotic hand must match the size and weight of a human hand as closely as possible while remaining dexterous. After several design iterations, the latest fourth-generation prosthetic hand, NTU-Hand IV (Figure 1), has 11 degrees of freedom and weighs approximately 654 grams. Its workspace is illustrated in Figure 2.

Figure 1. NTU-Hand IV

Figure 2. Workspace of NTU-Hand IV

Development of Electromyographic (EMG) Signal Control System:

In our EMG control research, the electrical signals generated by muscle contractions are captured and processed to extract features. Neural networks then classify these features to recognize the intended movement, allowing amputees to control a robotic hand directly with their residual muscles. The current recognition and control system is implemented on a TI 3x DSP. To reduce circuit size and weight, we plan to use system-on-chip (SoC) technology to integrate the entire recognition and control system into a single chip.
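The feature-extraction step described above can be illustrated with a minimal sketch. The text does not say which features the system uses, so the four time-domain features below (mean absolute value, root mean square, waveform length, zero crossings) are an assumption, chosen only because they are common in EMG pattern recognition. The function name `emg_features` and the `threshold` parameter are hypothetical, and the neural-network classifier that would consume these features is not shown.

```python
import math


def emg_features(window, threshold=0.01):
    """Compute time-domain features for one window of EMG samples.

    Assumed feature set (not confirmed by the source text):
    mean absolute value, RMS, waveform length, zero crossings.
    """
    n = len(window)
    pairs = list(zip(window, window[1:]))  # consecutive sample pairs

    # Mean absolute value: average rectified amplitude.
    mav = sum(abs(x) for x in window) / n
    # Root mean square: relates to signal power.
    rms = math.sqrt(sum(x * x for x in window) / n)
    # Waveform length: cumulative sample-to-sample change.
    waveform_length = sum(abs(b - a) for a, b in pairs)
    # Zero crossings: sign changes whose step exceeds a noise threshold.
    zero_crossings = sum(
        1 for a, b in pairs if a * b < 0 and abs(a - b) >= threshold
    )
    return {
        "mav": mav,
        "rms": rms,
        "waveform_length": waveform_length,
        "zero_crossings": zero_crossings,
    }


# Hypothetical six-sample window, for illustration only.
window = [0.0, 0.5, -0.5, 0.25, -0.25, 0.0]
features = emg_features(window)
```

In a pipeline of this kind, one feature vector per electrode channel and per time window would typically be concatenated and passed to the classifier.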

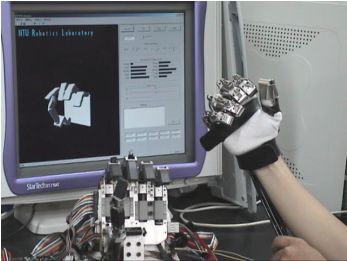

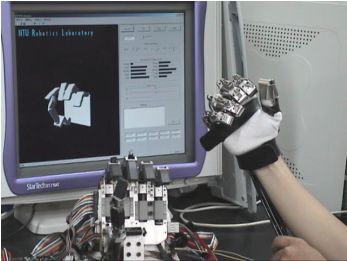

Development of Force Feedback Gloves and Virtual Reality:

In the development of force feedback gloves and virtual reality, we use steel wire mechanisms to control the operator's position and force. The data is transmitted to a computer via RS-232 and then sent to the prosthetic hand, enabling the operator to remotely control its movements and force. At the same time, the glove can reflect the state of the prosthetic limb, as shown in Figure 3.

Figure 3. Data Glove and Virtual Reality
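To make the glove-to-computer link more concrete, the following sketch shows how a position and force reading might be framed for a byte-oriented serial link such as RS-232. The actual packet format used by the glove is not described here, so the header byte, field layout, and checksum are all hypothetical, as are the names `encode_frame` and `decode_frame`. Opening the serial port itself is omitted.

```python
import struct

HEADER = 0xA5           # hypothetical start-of-frame marker
BODY_FORMAT = "<Bhh"    # header, position (int16), force (int16), little-endian
FRAME_LENGTH = struct.calcsize(BODY_FORMAT) + 1  # body plus one checksum byte


def encode_frame(position_counts, force_counts):
    """Pack one position/force sample into a frame with a checksum byte."""
    body = struct.pack(BODY_FORMAT, HEADER, position_counts, force_counts)
    checksum = sum(body) & 0xFF  # simple additive checksum over the body
    return body + bytes([checksum])


def decode_frame(frame):
    """Validate a frame and return (position_counts, force_counts)."""
    if len(frame) != FRAME_LENGTH or frame[0] != HEADER:
        raise ValueError("malformed frame")
    if sum(frame[:-1]) & 0xFF != frame[-1]:
        raise ValueError("checksum mismatch")
    _, position_counts, force_counts = struct.unpack(BODY_FORMAT, frame[:-1])
    return position_counts, force_counts


# Round trip with hypothetical sensor counts.
frame = encode_frame(1200, -35)
decoded = decode_frame(frame)
```

A checksum of this sort lets the receiver discard frames corrupted on the line rather than forwarding a bad command to the hand.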

Safety and Rehabilitation Robotics:

As society ages and labor productivity declines, the next generation of robots must be able to interact physically with users in a direct and natural manner. We therefore focus on human-robot interaction, particularly safe physical interaction, by developing an intelligent safety robot system. This system is designed to operate in unstructured environments while taking into account user intentions, robot performance, and interaction safety. The research consists of five main topics:

- Development of safe-interaction and system performance standards

- Intrinsically safe actuation methods and mechanical design

- Impedance control for compliant joint robots

- Human intention recognition based on vision systems

- Real-time safe motion planning and control strategies

The ultimate goal of our laboratory is to integrate the above research topics into a practical robotic system. This system will feature an intention recognition module composed of facial expression recognition, joint attention, and behavioral decision-making. By incorporating safety mechanisms, advanced control theories, and real-time safe motion planning and control strategies, we aim to achieve autonomous and safe human-robot interaction.
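Of the topics listed above, impedance control lends itself to a short illustration. The sketch below uses the textbook joint-space form, in which the controller behaves like a virtual spring and damper pulling the joint toward a desired position. It is not the laboratory's controller: the function name `impedance_torque`, the gains, and the torque clamp `tau_max` (added here as a simple safety limit) are all assumptions.

```python
def impedance_torque(q, dq, q_des, dq_des, stiffness, damping, tau_max):
    """Single-joint impedance law with a saturated output.

    tau = stiffness * (q_des - q) + damping * (dq_des - dq)
    The result is clamped to [-tau_max, tau_max] (assumed safety limit).
    """
    tau = stiffness * (q_des - q) + damping * (dq_des - dq)
    return max(-tau_max, min(tau_max, tau))


# Small position error: the command stays inside the torque limit.
tau_small = impedance_torque(
    q=0.0, dq=0.5, q_des=0.25, dq_des=0.0,
    stiffness=8.0, damping=2.0, tau_max=5.0,
)

# Large position error: the command is clamped at the limit.
tau_large = impedance_torque(
    q=0.0, dq=0.5, q_des=2.0, dq_des=0.0,
    stiffness=8.0, damping=2.0, tau_max=5.0,
)
```

Lower stiffness makes the joint yield more readily when a person pushes against it, which is why the choice of gains trades tracking accuracy against compliance.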

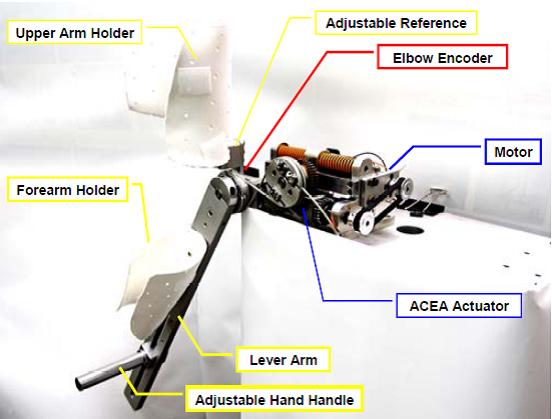

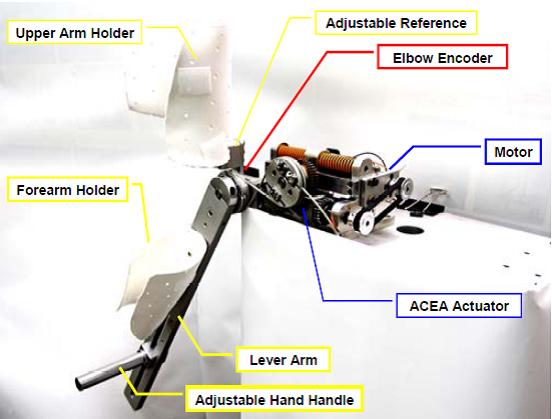

Furthermore, to address active and passive rehabilitation as well as strength augmentation, we first conducted a theoretical analysis of generalized human-machine systems. We then developed a flexible coupling-driven robotic system that adjusts its interactive force output in real time for different tasks. Using preset or dynamically adjustable parameters, the system can generate specific motions or optimized behaviors tailored to individual patients. This supports efficient, safe, and comfortable interaction, and is particularly beneficial for patients with spasticity.

In the future, based on this concept, we plan to design a full-body strength-enhancing robotic system that can assist both healthy individuals and those with physical impairments, helping them improve their movement and load-bearing capabilities.

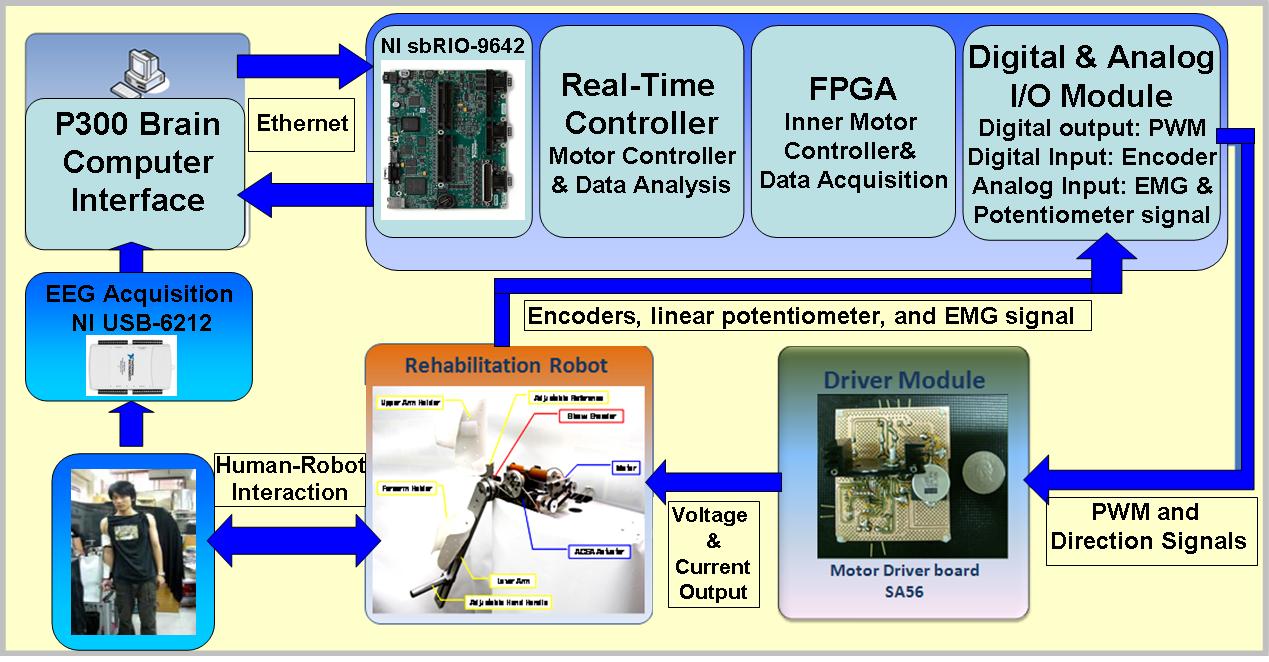

Brain-Computer Interface:

In recent years, many laboratories have begun researching brain-computer communication interfaces that let people communicate with the external world using brainwaves. The advantage of brainwaves is that they may be the only viable control signal for individuals with severe motor impairments caused by conditions such as stroke, ALS, or neuromuscular disorders. Even a patient who is unable to move can actively control their environment through a brain-computer interface.

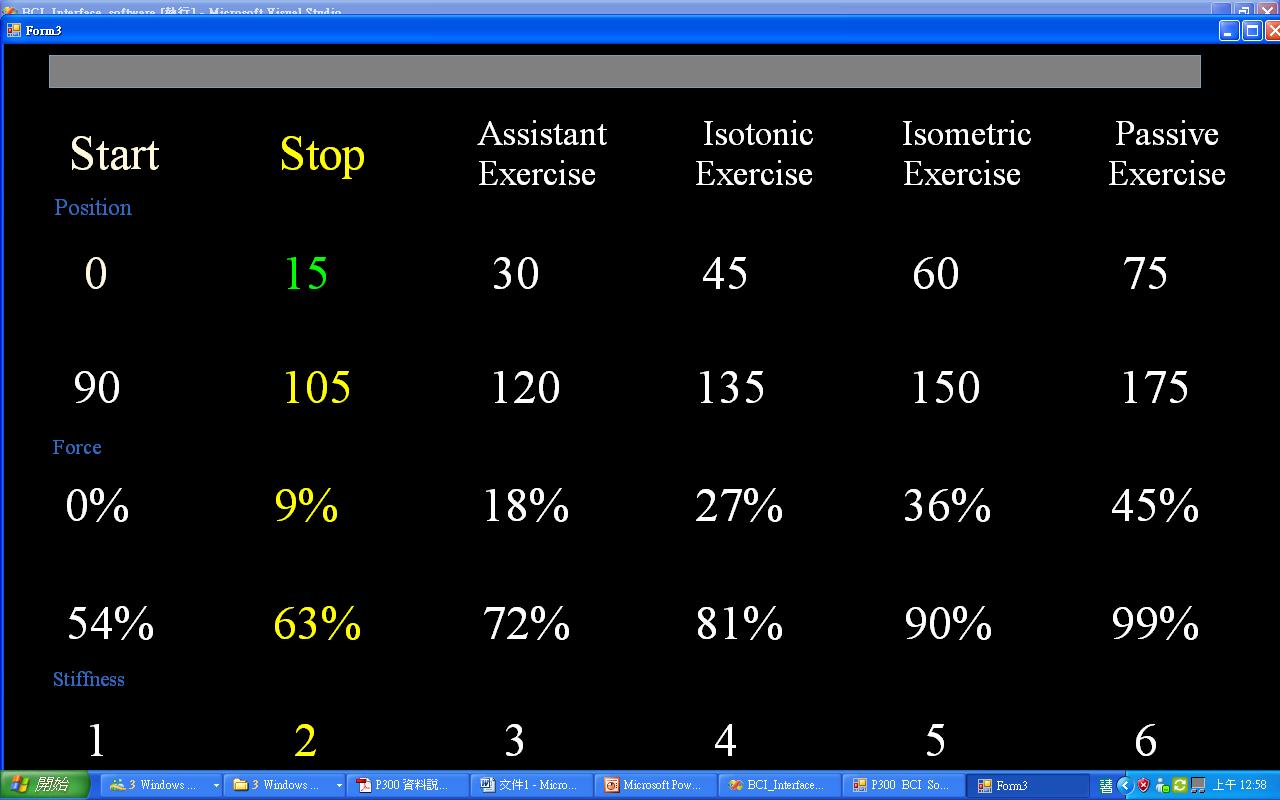

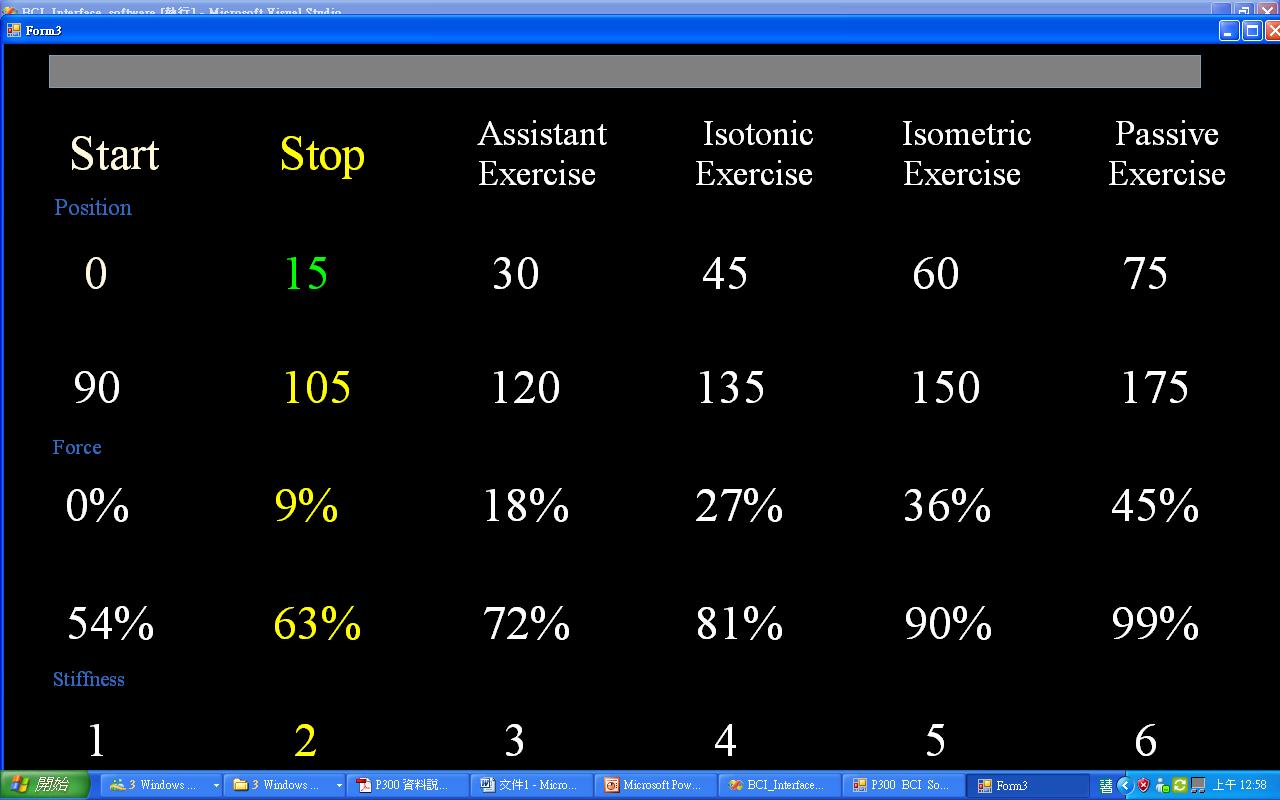

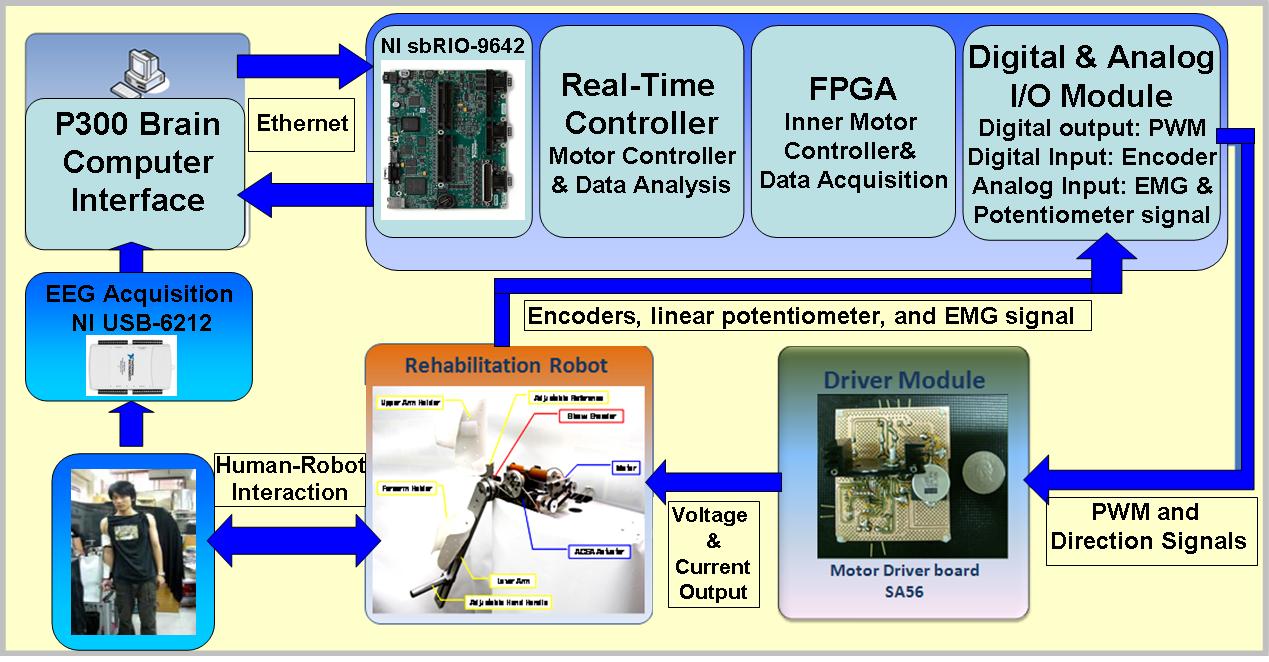

Our laboratory therefore uses a P300-based brain-computer interface (BCI), in which flashing stimuli evoke P300 brainwave signals. Once recognition algorithms have processed these signals, patients with severe conditions such as stroke or spinal cord injury can control an elbow rehabilitation device and perform rehabilitation and assistive movements independently. Currently, the P300 recognition accuracy can reach up to 100%, with an average recognition time of 30 seconds per character (about two characters per minute).
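The general idea behind P300 recognition can be sketched as follows. The recognition algorithm actually used is not specified here, so this example uses a deliberately simple stand-in: average the EEG epochs recorded after each stimulus, score each stimulus by its mean amplitude in a window around 300 ms, and pick the highest score. The function names, the 0.25 to 0.45 s window, the stimulus labels, and the toy data are all hypothetical.

```python
def p300_scores(epochs_by_stimulus, sample_rate_hz, window_s=(0.25, 0.45)):
    """Score each stimulus by mean averaged amplitude in the P300 window.

    epochs_by_stimulus maps a stimulus label to a list of equal-length
    epochs, each a list of samples starting at stimulus onset.
    """
    start = int(window_s[0] * sample_rate_hz)
    stop = int(window_s[1] * sample_rate_hz)
    scores = {}
    for stimulus, epochs in epochs_by_stimulus.items():
        n_samples = len(epochs[0])
        # Averaging across repeated flashes suppresses background EEG.
        averaged = [
            sum(epoch[i] for epoch in epochs) / len(epochs)
            for i in range(n_samples)
        ]
        segment = averaged[start:stop]
        scores[stimulus] = sum(segment) / len(segment)
    return scores


def select_target(epochs_by_stimulus, sample_rate_hz):
    """Return the stimulus label with the largest P300 score."""
    scores = p300_scores(epochs_by_stimulus, sample_rate_hz)
    return max(scores, key=scores.get)


# Toy data at 10 Hz (0.6 s epochs); "flex" carries a peak near 300 ms.
toy_epochs = {
    "flex": [[0, 0, 1, 3, 1, 0], [0, 1, 3, 1, 0, 0]],
    "extend": [[0, 1, 0, -1, 0, 1], [1, 0, 1, 0, -1, 0]],
}
target = select_target(toy_epochs, sample_rate_hz=10)
```

Because the P300 response is small relative to background activity, several flashes per stimulus are usually averaged before a decision is made, which is one reason each selection takes many seconds.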

To improve usability, our future goals are to reduce the time required to recognize each character and to explore brainwave classification without external stimuli, such as motor imagery classification. We also aim to extend brainwave control to other devices, such as a seven-axis robotic arm or a wheelchair, helping users lead more independent lives.

Figure 4. Control Panel

Figure 5. Rehabilitation Mechanism

Figure 6. Brain-Computer Interface and Rehabilitation System Architecture
